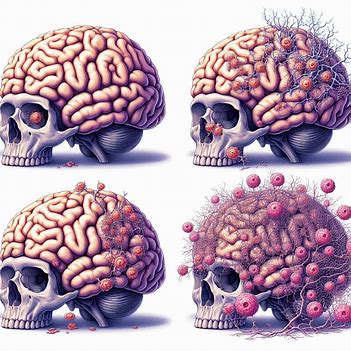

Do Our Brains Die As We Get Older?

The reason our brains fail as we age is not neurons dying off.

I keep company with my 105-year-old mother-in-law. It is undeniable that her brain is failing her. In her case, the cause may be related to her failing heart, resulting in vascular dementia - brain failure caused by lack of blood supply.

So, while many regard this as a "scientific" fact, the idea that our "neurons are dying out" is a metaphor for declining brain function. It's a helpful metaphor, but we can come up with a better one.

We think of neurons somehow "connecting" the atomic version of "mind stuff," which somehow adds up to "us" - our experience of being in the world. That feeling of being some continuing entity in the world is called our "remembered self." As we study how this "self" is constructed, we come to see that the function of the brain is to compress and simplify the personal narrative we tell ourselves. This partly explains the miracle that a twenty-watt piece of meat can create the illusion of "remembering" 100 years of life.

The other explanation comes from one obvious way this miracle takes place.

While the total number of words in English is difficult to pinpoint precisely due to the dynamic nature of language, most major dictionaries contain several hundred thousand words, with estimates for the entire English lexicon ranging from around 600,000 to 1 million words when including technical and obscure terms. However, the core vocabulary used by the average English speaker is much smaller, around 20,000 to 35,000 words.

Large language models (LLMs) deal with tens of thousands of "tokens" (very roughly equivalent to "words") and millions or billions of "parameters" (roughly equivalent to synapses - connections between words). They do a decent job of imitating human thought.

This leads us to the "better metaphor." As we learn the language, we "plug in" to the LM, which is the actual language model of our world. Each word we know is "borrowed" from this LM, along with its "meaning," which connects it to hundreds of other words. As we get older, the LM helps us "think."

As we age, our "connection" to the LM becomes inefficient. We struggle to find the right word. At the same time, our "meat" memory system begins to lose track of the past. We forget big chunks of the past as the brain's ability to compress and simplify is overwhelmed. But still, even at 105, we can "connect" to the LM and carry on an intelligible conversation. We remember the words, but their meaning (the connection to our past) slowly disappears.

Inevitably, we die. What is left of us lives on in the LM. Our personal narrative dies with us - sometimes compressed down to almost nothing if we are lucky enough to live long enough. Near the end, we struggle to remember the faces of our loved ones or the time of day. Our narrative becomes part of the narrative of those who knew us, slowly becoming compressed away to a vaguely remembered name and date, then flickering out entirely.

Our metaphor should not be dying neurons. Instead, we should think of our remembered self as turning from a novel into a pamphlet. On the other hand, whatever we were ever able to say or understand lives on in the language we started to borrow when we were toddlers.

We can do nothing to prevent this process, but during life, we can expand our "remembered self" to a vast degree by expanding our vocabulary. Our words can become heavy with meaning, richly connected to our experience and other words. We can become storytellers. We can become wise. Ultimately, our wisdom (such as it is) lives on in the borrowed words we speak.

More generally, language models contain whole ideas. They refer to physical objects such as "art." We make language structures such as "real" novels. Our crude LLMs absorb the world, not just words.

While LLMs may only pretend to "think," they are perhaps a better model of the mind than one based on a few million dying neurons in a deteriorating meat memory machine.
